Is AI stealing your creativity — or just remixing it like every artist before?

While artists are suing AI companies for billions, innovation experts argue we're witnessing the next evolution of remix culture. Who's right?

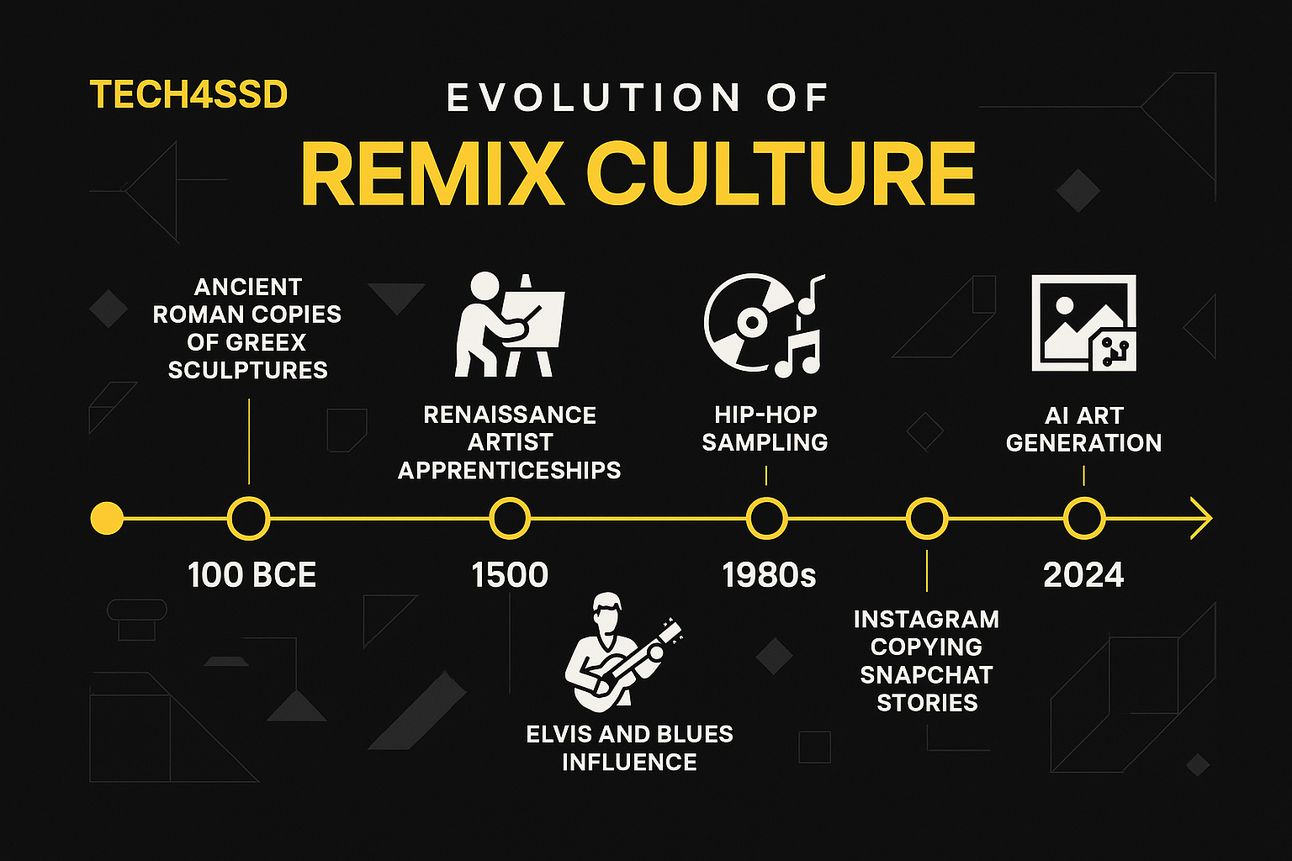

The uncomfortable truth: Every creative breakthrough in history has been a remix. From Elvis borrowing from Black blues artists to Instagram copying Snapchat Stories — innovation has always been about recombination, not pure invention.

But AI changes the game. When Stable Diffusion can analyze millions of artworks and generate new pieces in seconds, are we looking at the ultimate creative tool or the most sophisticated theft machine ever built?

Is AI Stealing or Inspiring? The Debate Around Originality & Remix Culture

By Tech4SSD Team

If AI trains on millions of artworks, is it stealing — or remixing like every artist before it? Let's unpack the new rules of creativity.

The creative world is experiencing its most significant upheaval since the invention of photography. Artificial intelligence can now generate stunning artwork in seconds, compose symphonies, and write compelling prose. But beneath this technological marvel lies a contentious question dividing the creative community: Is AI a revolutionary tool for democratizing creativity, or an elaborate theft machine pillaging human artistic expression?

The debate has reached fever pitch. Artists like Kelly McKernan, whose distinctive style has been replicated thousands of times by AI systems without permission, argue their life's work is being stolen and commoditized [1]. Innovation experts like Shawn Kanungo contend AI is simply the latest evolution in humanity's long tradition of remix culture, where all creativity builds upon what came before [2].

This isn't just academic discussion. With the AI art market exploding and legal battles mounting, the outcome will reshape how we define creativity, ownership, and artistic value in the digital age. The stakes couldn't be higher for creators, technologists, and society.

Section 1: The Remix Culture We Already Live In

To understand whether AI represents theft or inspiration, we must examine the creative ecosystem that existed long before algorithms entered the picture. The uncomfortable truth is that pure originality — creating something entirely from nothing — has always been a myth.

Music's Foundation in Sampling and Borrowing

The music industry provides the clearest example of how remix culture has shaped artistic expression for decades. Hip-hop, one of the most influential musical movements of the past fifty years, was built largely on sampling: taking pieces of existing recordings and transforming them into something new.

Consider country music, which emerged from the fusion of African banjo traditions brought by enslaved people and British folk melodies carried by immigrants [3]. What we now consider "authentic" country music is actually a cultural remix that took centuries to develop. The banjo itself, now synonymous with American folk music, descends from West African instruments and was transformed through cultural exchange.

Modern pop music continues this tradition. Lady Gaga openly channels David Bowie, Madonna, and Queen. Missy Elliott's groundbreaking "Get Ur Freak On" masterfully combines German techno, Japanese musical intros, and Punjabi beats into something entirely new yet clearly derivative [4]. These artists aren't considered thieves — they're celebrated as innovators who understand that creativity is fundamentally about recombination.

Visual Art's History of Appropriation

Visual arts have an equally rich tradition of borrowing and transformation. Ancient Romans created copies of Greek sculptures, often adapting the originals to their own tastes. During the Renaissance, artists routinely copied masters as part of their training, and many of history's greatest works were commissioned pieces that followed strict guidelines and incorporated existing iconography.

The twentieth century saw this tradition formalized through movements like Pop Art. Roy Lichtenstein built his career reproducing comic book frames as high art. Andy Warhol's Campbell's Soup Cans and Marilyn Monroe portraits were direct appropriations of existing commercial and photographic imagery. These works are now considered masterpieces precisely because they demonstrated how context and presentation can transform meaning.

Digital Culture and Platform Evolution

The internet age has dramatically accelerated remix culture. Memes represent perhaps the purest form of collaborative creativity, where images, phrases, and concepts evolve through countless iterations across millions of users.

Even our most celebrated tech platforms are built on remix principles. Instagram borrowed Snapchat's Stories feature, which was then copied by Facebook, YouTube, and TikTok. This iterative improvement through borrowing is considered standard practice in tech, not theft.

This historical context is crucial for understanding the AI debate. When critics argue AI is fundamentally different because it "steals" from existing works, they're applying a standard that would invalidate much of human creative history. The question isn't whether AI builds on existing work — all creativity does. The question is whether AI's method crosses ethical or legal boundaries that human creativity has traditionally respected.

Section 2: How AI 'Learns' from Human Work

Understanding the technical process behind AI creativity is essential for evaluating whether it constitutes theft or legitimate inspiration. The way AI systems learn from human work is both more complex and more analogous to human learning than many critics acknowledge.

The Training Process Demystified

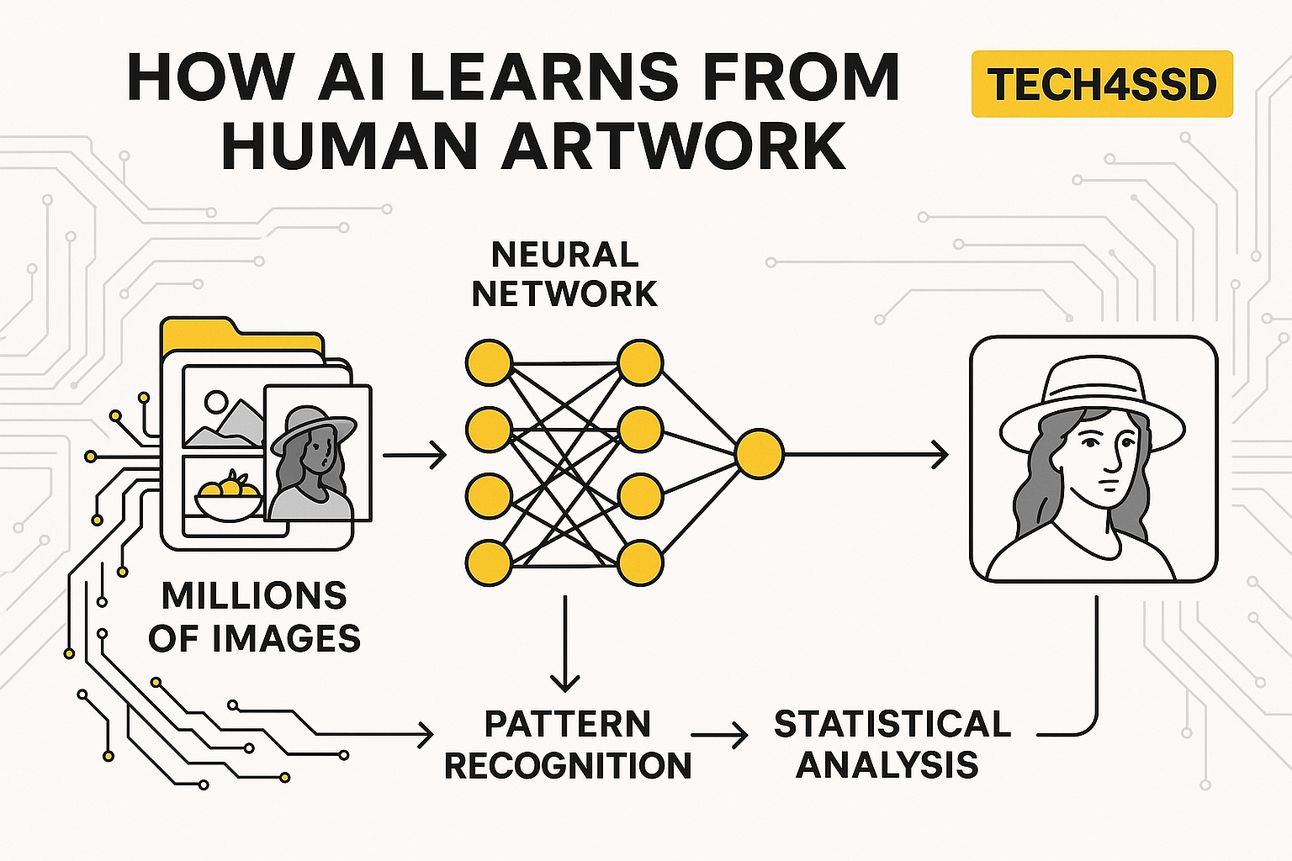

Modern AI art generators like Stable Diffusion, Midjourney, and DALL-E are trained on massive datasets containing millions, and in some cases billions, of images paired with descriptive text. During training, the AI isn't designed to store these images in any recognizable form. Instead, it learns statistical patterns about how visual elements relate to textual descriptions [5].

The process is analogous to how a human art student might study thousands of paintings to understand how different artists handle light, composition, and color. The student doesn't memorize each painting pixel by pixel, but rather internalizes patterns and techniques that inform their own creative work. Similarly, AI systems learn abstract relationships between concepts rather than copying specific images.

When you prompt an AI to create "a sunset in the style of Van Gogh," the system doesn't retrieve a Van Gogh painting and modify it. Instead, it generates new pixels based on its learned understanding of what "sunset" and "Van Gogh style" mean in visual terms. The resulting image may capture Van Gogh's characteristic brushwork patterns and color palette, but it's created from scratch using statistical models.
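For readers who like to see the idea in code, here is a deliberately tiny analogy. It is not a description of how Stable Diffusion or any real image generator is implemented. The "model" below reduces a made-up training set to two numbers, then generates new values from those two numbers alone. The names `fit` and `generate` and the brightness data are illustrative assumptions.

```python
import random
import statistics


def fit(samples):
    """'Training': reduce the data to two summary statistics."""
    return {
        "mean": statistics.fmean(samples),
        "stdev": statistics.stdev(samples),
    }


def generate(model, n, rng):
    """'Generation': draw fresh values from the learned distribution."""
    return [rng.gauss(model["mean"], model["stdev"]) for _ in range(n)]


rng = random.Random(0)

# Hypothetical training set: 10,000 made-up "brightness" values.
training_data = [rng.gauss(0.6, 0.1) for _ in range(10_000)]

model = fit(training_data)             # the model holds 2 numbers, not 10,000
new_values = generate(model, 5, rng)   # sampled from the model, not looked up

print(model)
print(new_values)
```

Real generators learn vastly more than two parameters, and whether models that large can still reproduce individual training images is part of what the lawsuits discussed below dispute, so treat this as intuition only.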

The Scale and Legal Implications

The datasets used to train AI systems are unprecedented in scope. LAION-5B, one of the largest publicly available datasets, contains over 5 billion image-text pairs scraped from the internet [6]. This includes everything from professional artwork and photography to memes, screenshots, and amateur photos posted on social media.

The sheer scale raises important questions about consent and compensation. Unlike human artists, who might study a few hundred works during their education, AI systems analyze millions or even billions of images without explicit permission from creators. This difference in scale is often cited as a key distinction that makes AI training ethically problematic.

The ongoing legal battle between artists and AI companies provides crucial insight into how courts are interpreting these processes. In the landmark case Andersen v. Stability AI, artists argue that Stable Diffusion contains "compressed copies" of their artwork, making every generated image a derivative work that infringes copyright [7].

Judge William Orrick's ruling in August 2024 allowed this theory to proceed, stating that AI systems may facilitate copyright infringement by design [8]. However, the central question of whether AI training constitutes fair use remains unresolved.

Understanding these technical realities is crucial for developing informed opinions about AI creativity. The technology is neither the revolutionary breakthrough that some proponents claim nor the simple theft machine that some critics describe. It's a sophisticated system that learns patterns from existing work to generate new content, much like human artists do, but at unprecedented scale and speed.

Section 3: When Does Inspiration Become Theft?

The line between inspiration and theft has always been subjective, but AI has forced us to examine this boundary with unprecedented precision. What distinguishes legitimate artistic influence from problematic appropriation?

Artist Perspectives on the Theft Debate

The creative community's response to AI has been far from uniform, but many artists express deep concern about how their work is being used without consent. Kelly McKernan, one of the lead plaintiffs in the Stability AI lawsuit, discovered that their name had been used over 12,000 times in public prompts on Midjourney [9]. The resulting images bore striking similarities to McKernan's distinctive style, which blends Art Nouveau influences with science fiction themes.

"It just got weird at that point," McKernan explained. "It was starting to look pretty accurate, a little infringe-y. I can see my hand in this stuff, see how my work was analyzed and mixed up with some others' to produce these images" [10]. This personal violation — seeing one's artistic voice replicated and commoditized without permission — represents the emotional core of the theft argument.

Digital artist Greg Rutkowski found his name being used in AI prompts so frequently that it became difficult to find his original work in Google searches, as AI-generated images in his style dominated the results [11]. The irony is stark: an artist's success and distinctive style become the very qualities that make them vulnerable to AI replication.

However, not all artists view AI as theft. Some see it as a powerful tool that can augment human creativity when used ethically. Artist and researcher Mario Klingemann has embraced AI as a collaborative medium, arguing that the technology can help artists explore new aesthetic territories impossible to reach through traditional methods [12].

Legal Gray Areas and Fair Use

The legal framework surrounding AI creativity remains frustratingly unclear, with courts struggling to apply copyright law designed for human creators to algorithmic systems. The concept of fair use, which allows limited use of copyrighted material for purposes like criticism, comment, or transformation, is central to this debate.

Traditional fair use analysis considers four factors: the purpose and character of the use, the nature of the copyrighted work, the amount and substantiality of the portion used, and the effect on the potential market for the original work. Applying these factors to AI training reveals the complexity of the issue.

The transformative nature of AI-generated content supports a fair use argument under the first factor. AI systems generally don't reproduce existing works directly but create new images based on learned patterns. However, the commercial nature of many AI systems, which courts also weigh under that factor, complicates this analysis. Companies like Stability AI and Midjourney profit from their AI models, which were trained on copyrighted works without compensation to original creators.

Style Imitation vs. Content Copying

One of the most nuanced aspects involves the distinction between imitating an artistic style and copying specific content. Copyright law has traditionally protected specific expressions but not general styles or techniques. You can't copyright the impressionist style, but you can copyright a specific impressionist painting.

AI systems primarily learn and replicate styles rather than copying specific content. When prompted to create an image "in the style of Picasso," an AI generates new content that incorporates Picasso's characteristic techniques — cubist fragmentation, bold colors, geometric forms — without reproducing any specific Picasso painting.

This distinction matters legally and ethically. Style imitation has been accepted throughout art history as a legitimate form of learning and expression. However, AI's ability to replicate styles with unprecedented accuracy and speed raises new questions. When an AI can generate hundreds of images in a specific artist's style in minutes, does this cross a line from inspiration to exploitation?

The question of when inspiration becomes theft doesn't have simple answers, but it requires honest engagement with the concerns of human creators whose livelihoods and artistic identities are at stake. The goal should be developing frameworks that allow AI to enhance human creativity while respecting the rights and contributions of the artists whose work makes that enhancement possible.

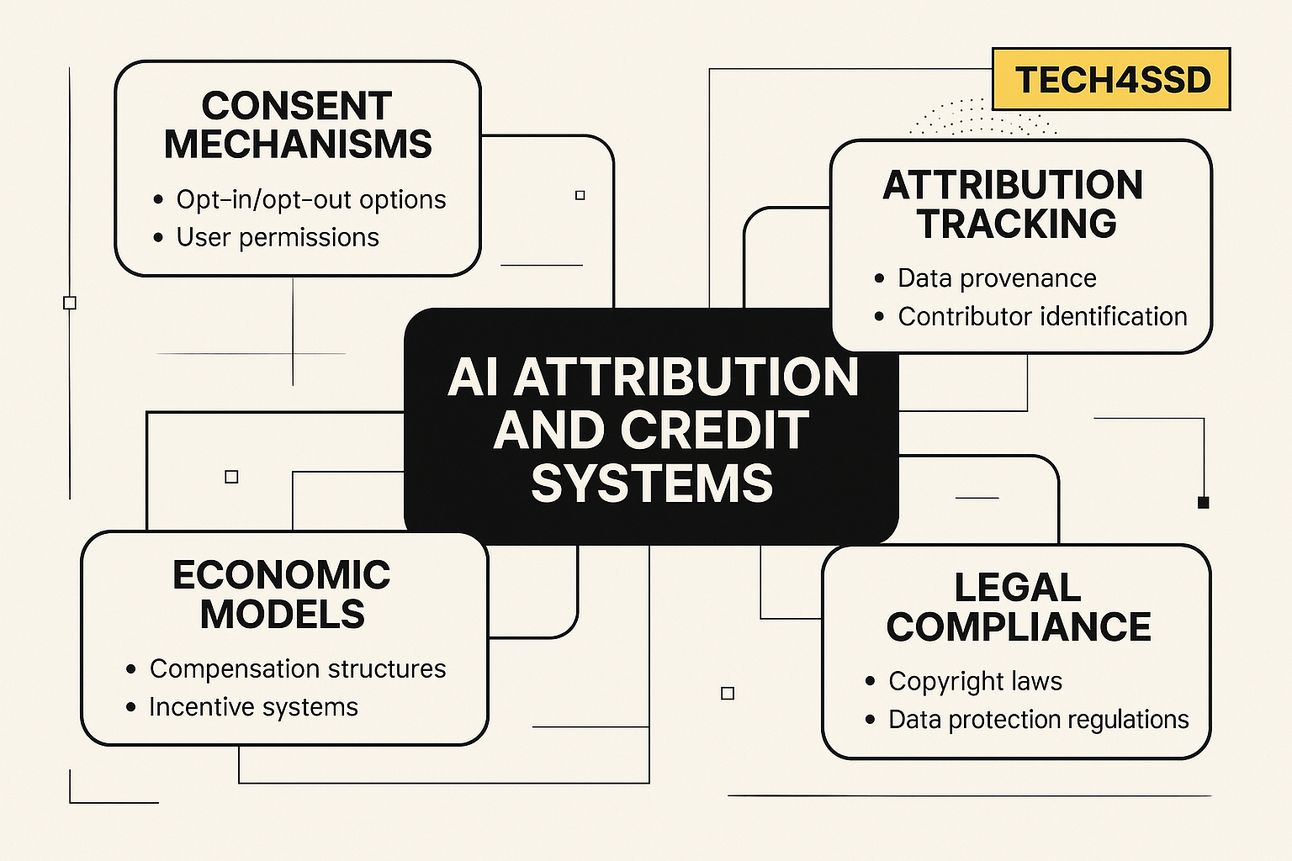

Section 4: The Ethics of Attribution & Credit

As AI systems become increasingly sophisticated at generating human-like creative content, the question of attribution has evolved from a technical challenge to a moral imperative. How do we ensure that human creators whose work enables AI creativity receive appropriate recognition and compensation?

The Attribution Challenge in AI Systems

Current AI systems operate as black boxes when it comes to attribution. When Stable Diffusion generates an image, there's no mechanism to identify which of the millions of training images most influenced the output. This opacity isn't necessarily intentional — it's a byproduct of how neural networks process information through layers of mathematical transformations that obscure direct connections between input and output.

This technical limitation has profound ethical implications. Unlike human artists who can cite their influences or musicians who must clear samples, AI systems provide no pathway for crediting the creators whose work enabled their capabilities. The result is a system that benefits from human creativity while providing no mechanism for recognition or compensation.

Some researchers are working on developing "influence tracking" systems that could identify which training data most significantly impacted specific AI outputs [13]. However, the technical challenges are immense, and current approaches can only provide rough approximations rather than precise attribution.
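One rough approximation that is easy to picture is similarity search: represent the output and each training image as a list of numbers, then rank the training items by how close they sit to the output. The sketch below assumes such vectors already exist. The three-number vectors and the `artist_x/work_n` labels are invented for illustration, and similarity is only a stand-in for influence, not proof of it.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(output_vec, training_vecs, top_k=2):
    """Return the top_k training items most similar to the output."""
    scored = [(name, cosine(output_vec, vec)) for name, vec in training_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings; real systems would use far longer vectors.
training_vecs = {
    "artist_a/work_1": [0.9, 0.1, 0.0],
    "artist_b/work_7": [0.1, 0.9, 0.2],
    "artist_c/work_3": [0.0, 0.2, 0.9],
}
output_vec = [0.8, 0.3, 0.1]

ranking = rank_by_similarity(output_vec, training_vecs)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

A high score here says two images look alike in the chosen representation. It does not say the training image caused the output, which is why this kind of ranking falls short of precise attribution.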

Tools Emerging to Detect and Train Ethically

The growing awareness of attribution and consent issues has spurred development of new tools and platforms designed to address these concerns:

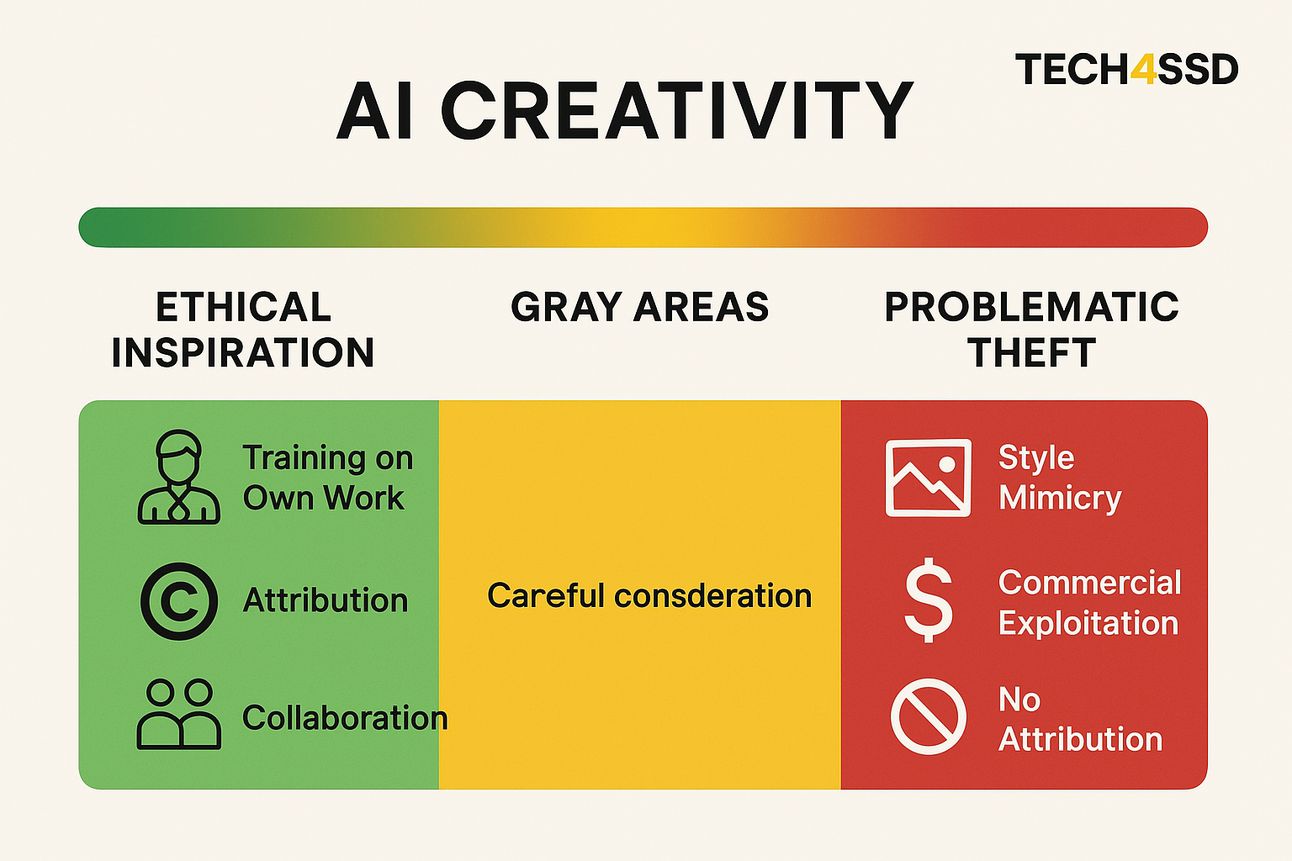

Ethical AI Platforms: Services like Adobe Firefly train on licensed and public-domain content, so that training data is either used with permission or free of copyright restrictions [14]. While this approach limits the diversity of training data, it provides clear ethical guidelines for AI development.

Artist Consent Platforms: Tools like CreateBase allow artists to specify how their work can be used for AI training and automatically handle licensing and compensation [15].

Protection Tools: Tools like Glaze and Nightshade help artists protect their work from unauthorized AI training by adding subtle modifications, designed to be hard for humans to notice, that disrupt AI learning algorithms [16].

Transparency Requirements: The EU AI Act requires that certain AI-generated content be clearly identified as such and that providers of general-purpose AI models publish a summary of the content used for training [17].

Economic Models for Fair Compensation

Several economic models have been proposed for ensuring artists benefit from AI development:

Training Royalties: Artists would receive payment when their work is included in AI training datasets.

Output Royalties: Artists would receive ongoing payments when AI systems generate content that draws from their work.

Collective Licensing: Industry-wide systems would pool compensation and distribute it to artists based on usage.

Platform Revenue Sharing: AI companies would dedicate a percentage of revenue to compensating artists whose work enabled their systems.
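To make the collective licensing and revenue sharing ideas concrete, here is a minimal sketch of a pro-rata split. Everything in it is an assumption for illustration: the function name `distribute_pool`, the artist labels, the pool size, and above all the "usage counts", since measuring usage is exactly the attribution problem described earlier. Amounts are in cents to avoid rounding drift.

```python
def distribute_pool(pool_cents, usage_counts):
    """Split a compensation pool among artists in proportion to usage.

    pool_cents:   total amount to distribute, in whole cents
    usage_counts: mapping of artist -> usage count (however usage is measured)
    """
    total_usage = sum(usage_counts.values())
    if total_usage == 0:
        return {artist: 0 for artist in usage_counts}

    # Floor each share, then hand out leftover cents one at a time,
    # starting with the most-used artists, so the payouts sum to the pool.
    payouts = {
        artist: pool_cents * count // total_usage
        for artist, count in usage_counts.items()
    }
    leftover = pool_cents - sum(payouts.values())
    by_usage = sorted(usage_counts, key=usage_counts.get, reverse=True)
    for artist in by_usage[:leftover]:
        payouts[artist] += 1
    return payouts


# Hypothetical numbers: a $1,000 pool and three artists.
usage = {"artist_a": 600, "artist_b": 300, "artist_c": 100}
payouts = distribute_pool(100_000, usage)
print(payouts)  # {'artist_a': 60000, 'artist_b': 30000, 'artist_c': 10000}
```

The arithmetic is the easy part. The hard design questions sit in the inputs: who counts usage, how style influence is weighed against direct inclusion in a dataset, and who audits the numbers.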

The challenge of attribution in AI systems reflects broader questions about how we value and compensate creativity in the digital age. As AI capabilities continue to advance, developing fair and practical attribution systems will be crucial for maintaining a sustainable creative ecosystem that benefits both human artists and AI developers.

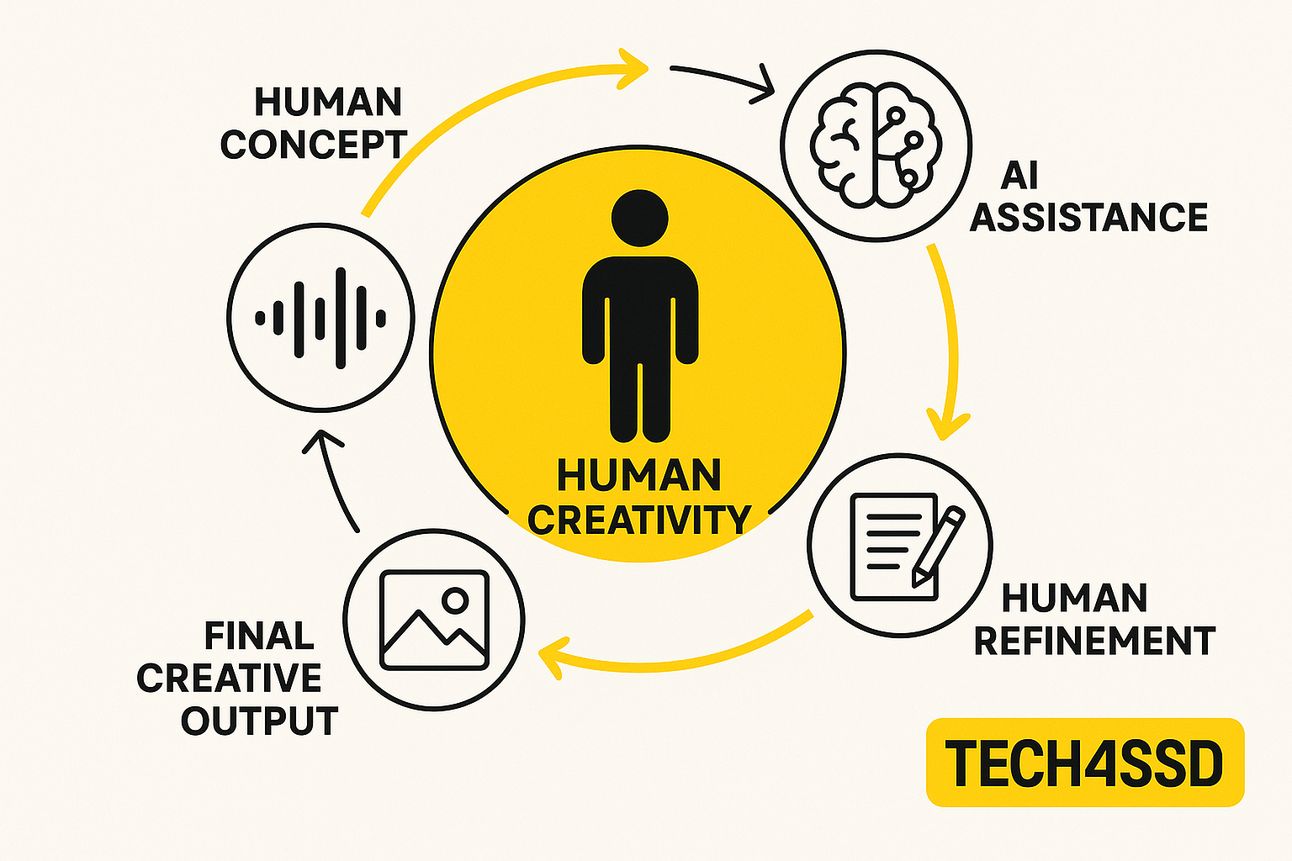

Section 5: The Path Forward — Co-Creation, Not Replacement

Rather than viewing AI as either a creative savior or an artistic apocalypse, the most productive path forward involves embracing AI as a collaborative tool that enhances rather than replaces human creativity. This approach requires thoughtful implementation, ethical guidelines, and a fundamental shift in how we think about the relationship between human and artificial intelligence.

AI as Collaborator, Not Creator

The most successful applications of AI in creative fields treat the technology as a sophisticated collaborator rather than an autonomous creator. This collaborative model preserves human agency and creativity while leveraging AI's unique capabilities for exploration, iteration, and technical execution.

Professional artists are already demonstrating how this collaboration can work. Concept artist Ian McQue uses AI tools to rapidly generate background elements and textures, which he then refines and integrates into his hand-painted illustrations [18]. This approach allows him to focus his creative energy on the most important aspects of his work while using AI to handle time-consuming technical tasks.

Musicians like Holly Herndon have pioneered AI collaboration by training systems on their own voices and compositions, creating AI "twins" that can generate new material in their style [19]. Rather than replacing human creativity, these AI collaborators expand the artist's creative palette and enable new forms of expression.

The key to successful AI collaboration lies in maintaining human creative control. The artist determines the creative direction, makes aesthetic decisions, and provides the conceptual framework that gives meaning to the work. AI contributes technical capabilities, rapid iteration, and the ability to explore vast possibility spaces.

How Human Voice, Intent, and Curation Still Matter

Despite AI's impressive technical capabilities, human creativity brings irreplaceable elements that no algorithm can replicate: intentionality, emotional depth, cultural context, and the ability to imbue work with personal meaning and social commentary.

Intentionality: Human artists create with purpose, whether to express personal experiences, comment on social issues, or explore philosophical questions. This intentionality gives art its power to move, challenge, and inspire audiences. AI systems, regardless of their sophistication, lack genuine intention.

Emotional Authenticity: The most powerful art emerges from human experience — love, loss, struggle, triumph, and the full spectrum of emotional life. While AI can simulate emotional expression, it cannot draw from genuine lived experience to create work that resonates with authentic feeling.

Cultural Context: Human artists are embedded in cultural contexts that inform their work in subtle but crucial ways. They understand social dynamics, historical references, and cultural sensitivities that AI systems, trained on decontextualized data, often miss or misinterpret.

Curation and Taste: Perhaps most importantly, human artists possess taste — the ability to recognize quality, make aesthetic judgments, and curate their output to achieve specific artistic goals. AI systems can generate vast quantities of content, but they lack the discernment to distinguish between good and great, meaningful and superficial.

Building Sustainable Creative Ecosystems

The long-term success of AI in creative fields depends on developing sustainable ecosystems that benefit both human artists and AI developers. This requires moving beyond the current zero-sum thinking that positions AI and human creativity as competitors.

Economic Models: New economic models must emerge that ensure human artists can benefit from AI development rather than being displaced by it. This might include revenue-sharing agreements, licensing systems, or new forms of creative collaboration.

Educational Integration: Art education must evolve to include AI literacy, helping emerging artists understand how to collaborate effectively with AI tools while maintaining creative autonomy.

Platform Responsibility: AI platforms have a responsibility to implement ethical guidelines, provide attribution mechanisms, and support the creative community that enables their technology.

Legal Frameworks: New legal frameworks must emerge that balance innovation with creator rights, providing clear guidelines for AI training, attribution, and compensation.

Practical Guidelines for Ethical AI Use

For creators interested in incorporating AI into their practice, here are practical approaches for ethical AI collaboration:

Train on Your Own Work: The most ethical approach involves training systems on your own creative output. This ensures the AI is learning from work you own and extending your personal style rather than appropriating others' creativity.

Use AI for Exploration, Not Final Output: Treat AI-generated content as a starting point for further development rather than finished work. Use AI to explore ideas, generate variations, and overcome creative blocks, but apply human judgment and refinement to create the final piece.

Maintain Creative Control: Always position yourself as the creative director of the collaboration. Make conscious decisions about what AI suggestions to accept, reject, or modify based on your artistic vision.

Be Transparent About AI Use: Clearly communicate when and how you've used AI in your creative process. This transparency builds trust with audiences and helps establish ethical norms for AI collaboration.

Respect Other Artists: Avoid using AI to directly mimic living artists' styles, especially for commercial purposes. If you're inspired by particular artists, find ways to acknowledge their influence and consider reaching out for permission or collaboration opportunities.

The future of creativity lies not in choosing between human and artificial intelligence, but in developing thoughtful collaborations that leverage the unique strengths of both approaches. By treating AI as a powerful tool for enhancing human creativity rather than replacing it, we can build a creative ecosystem that benefits artists, technologists, and society as a whole.

The key is ensuring that human creativity remains at the center of this collaboration, providing the intention, emotion, and meaning that transform technical capability into genuine art. When implemented thoughtfully and ethically, AI can democratize access to creative tools, accelerate artistic exploration, and enable new forms of expression that enrich our cultural landscape while respecting the artists who make it possible.

📰 Top AI News Stories

🏛️ Legal Battles Heat Up

• August 2024: Artists win a key ruling in their landmark copyright case against Stability AI, Midjourney, and DeviantArt, allowing core claims to proceed

• March 2025: US appeals court rules that art generated solely by AI cannot be copyrighted because it lacks a human author

• June 2025: Getty Images lawsuit against Stability AI continues in UK courts over 12 million allegedly stolen images

🛠️ Platform Updates

• Adobe Firefly: Expanding ethically sourced AI training with new licensing partnerships

• Creative Commons: Launches "preference signals" allowing creators to opt out of AI training

• Midjourney: Faces ongoing scrutiny over artist name usage in prompts (12,000+ uses of a single artist's name discovered)

📊 Market Impact

• AI art market projected to reach $5.93 billion by 2032 (33.1% CAGR)

• 67% of professional artists report concerns about AI impact on their livelihood

• New "ethical AI" platforms emerging with consent-based training models

P.S. What's your take on the AI creativity debate? Hit reply and let us know — we read every response and feature the best insights in future newsletters.

This email may contain links to tools we trust. Thanks for supporting Tech4SSD.

Tech4SSD | Building the future of creative technology
